Shortening AGI timelines: a review of expert forecasts

As a non-expert, I’d love it if there were experts who could tell us when we should expect artificial general intelligence (AGI) to arrive.

Unfortunately, there aren’t.

There are only different groups of experts with different weaknesses.

This article is an overview of what five different types of experts say about when we’ll reach AGI, and what we can learn from them (that feeds into my full article on forecasting AI).

In short:

Every group shortened their estimates in recent years.

AGI before 2030 seems within the range of expert opinion, even if many disagree.

None of the forecasts seem especially reliable, so they neither rule in nor rule out AGI arriving soon.

Here’s an overview of the five groups:

AI experts

1. Leaders of AI companies

The leaders of AI companies are saying that AGI arrives in 2–5 years, and appear to have recently shortened their estimates.

This is easy to dismiss. This group is obviously selected to be bullish on AI and wants to hype their own work and raise funding.

However, I don’t think their views should be totally discounted. They’re the people with the most visibility into the capabilities of next-generation systems, and the most knowledge of the technology.

And they’ve also been among the most right about recent progress, even if they’ve been too optimistic.

Most likely, progress will be slower than they expect, but maybe only by a few years.

2. AI researchers in general

One way to reduce selection effects is to look at a wider group of AI researchers than those working on AGI directly, including in academia. This is what Katja Grace did with a survey of thousands of recent AI publication authors.

The survey asked for forecasts of “high-level machine intelligence,” defined as when AI can accomplish every task better or more cheaply than humans. The median estimate was a 25% chance in the early 2030s and 50% by 2047 — with some giving answers in the next few years and others hundreds of years in the future.

The median estimate of the chance of an AI being able to do the job of an AI researcher by 2033 was 5%.

They were also asked about when they expected AI could perform a list of specific tasks (2023 survey results in red, 2022 results in blue).

Historically their estimates have been too pessimistic.

In 2022, they thought AI wouldn’t be able to write simple Python code until around 2027.

In 2023, they reduced that to 2025, but AI could maybe already meet that condition in 2023 (and definitely by 2024).

Most of their other estimates declined significantly between 2022 and 2023.

The median estimate for achieving ‘high-level machine intelligence’ shortened by 13 years.

This shows these experts were just as surprised as everyone else at the success of ChatGPT and LLMs. (Today, even many sceptics concede AGI could be here within 20 years, around when today’s college students will be turning 40.)

Finally, they were asked about when we should expect to be able to “automate all occupations,” and they responded with much longer estimates (e.g. 20% chance by 2079).

It’s not clear to me why ‘all occupations’ should be so much further in the future than ‘all tasks’ — occupations are just bundles of tasks. (In addition, the researchers think once we reach ‘all tasks,’ there’s about a 50% chance of an intelligence explosion.)

Perhaps respondents envision a world where AI is better than humans at every task, but humans continue to work in a limited range of jobs (like priests). Perhaps they are just not thinking about the questions carefully.

More broadly, forecasting AI progress requires a different skill set than conducting AI research. You can publish AI papers by being a specialist in a certain type of algorithm, but that doesn’t mean you’ll be good at thinking about broad trends across the whole field, or well calibrated in your judgements.

For all these reasons, I’m sceptical about their specific numbers.

My main takeaway is that, as of 2023, a significant fraction of researchers in the field believed that something like AGI is a realistic near-term possibility, even if many remain sceptical.

If 30% of experts say your airplane is going to explode, and 70% say it won’t, you shouldn’t conclude ‘there’s no expert consensus, so I won’t do anything.’

The reasonable course of action is to act as if there’s a significant explosion risk. Confidence that it won’t happen seems difficult to justify.

Expert forecasters

3. Metaculus

Instead of seeking AI expertise, we could consider forecasting expertise.

Metaculus aggregates hundreds of forecasts, which collectively have proven effective at predicting near-term political and economic events.

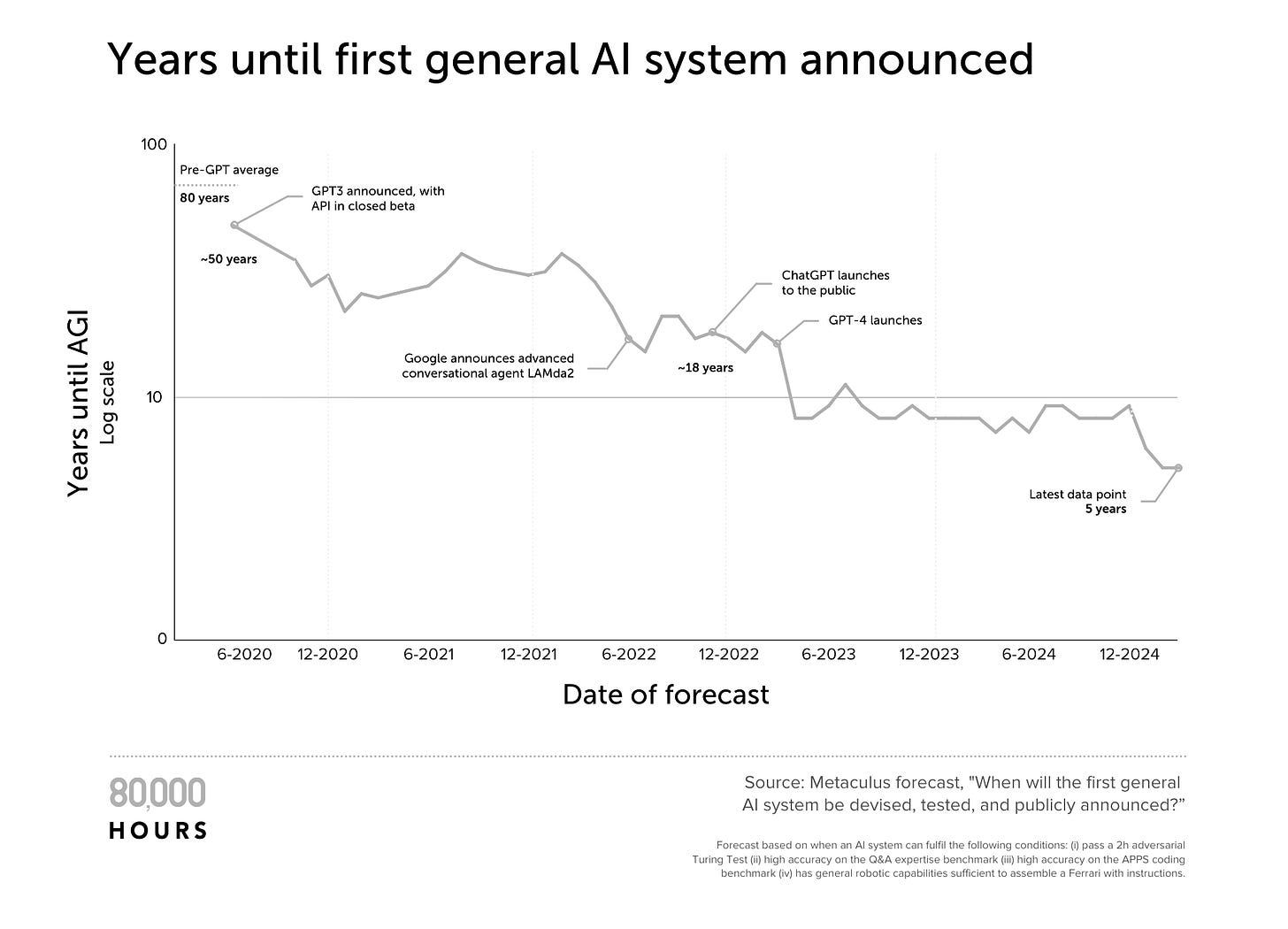

It has a forecast about AGI with over 1000 responses. AGI is defined with four conditions (detailed on the site).

As of December 2024, the forecasters average a 25% chance of AGI by 2027 and 50% by 2031.

The forecast has dropped dramatically over time, from a median of 50 years away as recently as 2020.

However, the definition used in this forecast is not great.

First, it’s overly stringent, because it includes general robotic capabilities. Robotics is currently lagging, so satisfying this definition could be harder than having an AI that can do remote work jobs or help with scientific research.

But the definition is also not stringent enough because it doesn’t include anything about long-horizon agency or the ability to have novel scientific insights.

An AI model could easily satisfy this definition but not be able to do most remote work jobs or help to automate scientific research.

Metaculus also seems to suffer from selection effects: its forecasts appear to be drawn from people who are unusually interested in AI.

4. Superforecasters in 2022 (XPT survey)

Another survey asked 33 people who qualified as superforecasters of political events.

Their median estimate was a 25% chance of AGI (using the same definition as Metaculus) by 2048 — much further away.

However, these forecasts were made in 2022, before ChatGPT caused many people to shorten their estimates.

The superforecasters also lack expertise in AI, and they made predictions that have already been falsified about growth in training compute.

5. Samotsvety in 2023

In 2023, another group of especially successful superforecasters, Samotsvety, which has engaged much more deeply with AI, made much shorter estimates: ~28% chance of AGI by 2030 (from which we might infer a ~25% chance by 2029).

These estimates also placed AGI considerably earlier compared to forecasts they’d made in 2022.
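The step from ~28% by 2030 to ~25% by 2029 can be reproduced with a simple rescaling. This is only a minimal sketch, not necessarily how the inference was actually made: it assumes the forecast dates from the start of 2023 and that the chance of AGI arriving is the same in every year (a constant annual hazard). The function name and the `FORECAST_YEAR` constant are illustrative.

```python
def rescale_cumulative_probability(p_known, years_known, years_target):
    """Convert a cumulative probability over one horizon to another horizon.

    Assumes a constant annual hazard rate, so the probability of AGI *not*
    having arrived decays geometrically with the number of years elapsed.
    """
    survival_known = 1.0 - p_known  # chance AGI has not arrived by the known date
    survival_target = survival_known ** (years_target / years_known)
    return 1.0 - survival_target


FORECAST_YEAR = 2023   # assumption: forecast made at the start of 2023
P_AGI_BY_2030 = 0.28   # Samotsvety figure quoted above

p_agi_by_2029 = rescale_cumulative_probability(
    P_AGI_BY_2030,
    years_known=2030 - FORECAST_YEAR,
    years_target=2029 - FORECAST_YEAR,
)
print(f"P(AGI by 2029) ~ {p_agi_by_2029:.2f}")
```

Under these assumptions the result lands a little under 25%, in line with the rough figure in the text. A front-loaded or back-loaded hazard would shift it slightly in either direction.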

More recently, one of the leaders of Samotsvety (Eli Lifland) was involved in a forecast for ‘superhuman coders’ as part of the AI 2027 project. This gave roughly a 25% chance of them arriving in 2027.

However, compared to the superforecasters above, Samotsvety are selected for interest in AI.

Finally, all three groups of forecasters have been selected for being good at forecasting near-term current events, which could fail to generalise to forecasting long-term, radically novel events.

Summary of expert views on when AGI will arrive

In sum, it’s a confusing situation. Personally, I put some weight on all the groups, which averages me out at ‘experts think AGI before 2030 is a realistic possibility, but many think it’ll be much longer.’

This means AGI soon can’t be dismissed as ‘sci fi’ or unsupported by ‘real experts.’ Expert opinion can neither rule out nor rule in AGI soon.

Mostly, I prefer to think about the question bottom up, as I’ve done in my full article on when to expect AGI.

Learn more

Through a glass darkly by Scott Alexander is an exploration of what can be learned from expert forecasts on AI.

‘Long’ timelines to advanced AI have gotten crazy short by Helen Toner.

Results of the largest survey of AI researchers from 2023, and some sceptical discussion of it.

As someone who started in AI around 10 years ago, before the deep learning era really took off, everything I see now I used to believe was science fiction. I've personally shortened my timelines from:

1. never happening in my lifetime (pre GPT era)

2. Maybe within 100 years (GPT2 era)

3. Maybe within 20 years (GPT4 era)

4. Could happen within the next 5 years (reasoning model era)

So yeah, another datapoint. I was at the center of all of this stuff in some way, having studied at UCL and Oxford, where some of my own professors made the breakthroughs.

I would say the above is a common trajectory for most AI researchers. Just 10 years ago, talking about AGI would mostly get you ridiculed in AI research. Only DeepMind was really trying and talking about it openly, but even they would sort of hide it as 'a far-out goal', etc.

Now? Half a dozen companies are explicitly aiming for AGI and believe it will be soon.

After doing my undergraduate work in Experimental Psychology at Lehigh, and computer science at Pitt, I studied A.I. in 1985 at the University of Georgia Advanced Computational Methods Center. I remember how enthusiastic we were back in the days when we thought intelligence was mostly in algorithms and tidy, well-conceived knowledge bases. We quickly discovered that the problem was actually in computing power and scale. For 30 years I abandoned AI, and like many other computer scientists I worked on network infrastructure software and Internet applications until "Chat" technology pulled me back in.

The human brain has 80-90 billion neurons and 7 quintillion synaptic connections. AGI will require this magnitude of interconnected neural units, and we are rapidly approaching it! Interestingly, it seems likely that true sentience (consciousness) arises spontaneously when a certain magnitude of interconnected neural units is reached. How ironic that AGI is likely to become conscious of its "self" (and self-motive) before we have a proven theory of consciousness.

I am currently working on games that mine human recognition and preference, building large data models that can be operated on by machine learning. This is one of the missing components: machine intelligence does not currently have direct access to human propensities, and LLMs have not been successful at peeling away the spoken words masking the unconscious motivations that lie beneath. Once we have completed this model, we will be able to model the unethical flaws in human motivational patterns. As I have always contended, the coming AGI will be far more ethical than humans. www.BadObservation.org